Some of you out there are still stuck on old deployment workflows that drop software directly onto shared hosts. Maybe it’s a personal thing that you just don’t have the energy to maintain particularly well. Maybe it’s a service at work that keeps limping along without any dedicated owner or maintenance resources.

This post is a call to action to do the minimum possible work to get it into a container, and to do that transition badly and quickly. I’ve done it for a bunch of minor things I maintain and it’s improved my life greatly; I just rebuild the images with the latest security updates every week or so and let them run on autopilot, never worrying about what previous changes have been made to the host. If you can do it¹, it’s worth it.

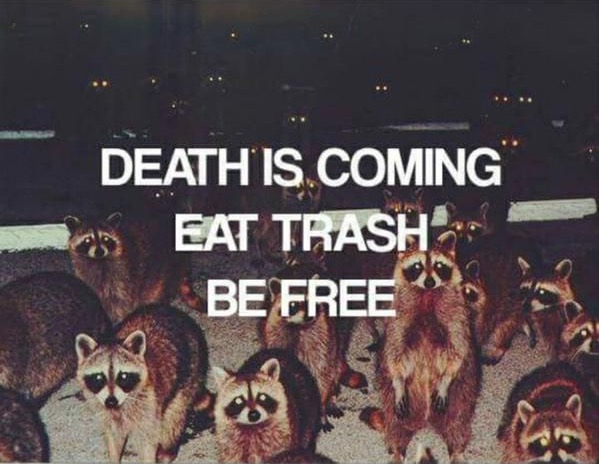

Death is Coming

Your existing mutable infrastructure is slowly decaying. You already know that one day you’re going to log in and update the wrong package and it’s gonna blow up half of the software running on your box. Some .so is going to go missing, or an inscrutable configuration conflict will make some network port stop listening.

Either that or you’re not going to update religiously, and eventually it’ll get commandeered by cryptocurrency miners. Either way, your application goes down and you do a lot of annoying grunt work to get it back.

These boxes won’t survive forever. You’ve gotta do something.

Eat Trash

You don’t need to follow the daily churn of containerization “best practices” in order to get 95% of the benefit of containers. The huge benefit is just having a fully repeatable build process that can’t compromise your ability to boot or remotely administer your entire server. Your build doesn’t have to be good or scalable. I will take 25 garbage shell scripts guaranteed to run isolated within a container over a beautifully maintained deployment system written in $YOUR_FAVORITE_LANGUAGE that installs arbitrary application packages as root onto a host any day of the week. The scope of potential harm from an error is reduced by orders of magnitude.

Don’t think hard about it. Just pretend you’re deploying to a new host and manually doing whatever faffing around you’d have to do anyway if your existing server had some unrecoverable hardware failure. The only difference is that instead of typing the commands after an administrative root@host# prompt on some freshly re-provisioned machine, you type them after a RUN statement in a Dockerfile.
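As a rough illustration of that translation, here is a minimal Python sketch that renders the commands you would otherwise type at a root prompt as Dockerfile text, one RUN instruction per command. Everything specific in it is a hypothetical placeholder: the debian:bookworm base image, the nginx setup steps, the start command, and the helper name commands_to_dockerfile. Swap in whatever your old host actually needed.

```python
import json

# Hypothetical base image: use whatever distro your old host ran.
BASE_IMAGE = "debian:bookworm"

# Hypothetical setup steps, i.e. the commands you'd otherwise type after
# a root@host# prompt on a freshly re-provisioned machine.
SETUP_COMMANDS = [
    "apt-get update && apt-get install -y nginx",
    "rm -f /etc/nginx/sites-enabled/default",
]

# Hypothetical command that keeps the service running in the foreground.
START_COMMAND = ["nginx", "-g", "daemon off;"]


def commands_to_dockerfile(base_image, commands, start_command):
    """Render a list of root-prompt shell commands as Dockerfile text."""
    lines = [f"FROM {base_image}"]
    # Every command you'd have typed by hand becomes its own RUN instruction.
    lines.extend(f"RUN {cmd}" for cmd in commands)
    # Exec-form CMD is a JSON array, so json.dumps produces valid syntax.
    lines.append(f"CMD {json.dumps(start_command)}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Save the output with e.g.: python make_dockerfile.py > Dockerfile
    print(commands_to_dockerfile(BASE_IMAGE, SETUP_COMMANDS, START_COMMAND), end="")
```

The script name make_dockerfile.py is arbitrary. Writing the Dockerfile by hand is just as good; the only idea here is that each hand-typed command maps onto one RUN line.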

Be Free

Now that you’ve built some images, rebuild them every so often, including pulling new base images. Deploy them with docker run --restart=always ... and forget about them until you have time for another round of security updates. If the service breaks? Roll back to the previous image and worry about it later. Updating this way means you get to decide how much debugging effort it’s worth if something breaks in the rebuild, instead of being down the moment a bad update lands.
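The rebuild-and-rollback loop can be scripted in the same throwaway spirit. This is a minimal Python sketch, not a description of the author’s setup: it assumes the docker CLI is on your PATH, and the image and container name (myapp), the :previous tag used for rollback, and the script name redeploy.py are all hypothetical. A rebuild first retags the current :latest as :previous, builds with --pull so newer base images get picked up, and then replaces the running container; a rollback just redeploys :previous.

```python
import subprocess
import sys

# Hypothetical names: change these to match your service.
IMAGE = "myapp"
CONTAINER = "myapp"
BUILD_CONTEXT = "."


def plan(action, image=IMAGE, container=CONTAINER, context=BUILD_CONTEXT):
    """Return (command, must_succeed) pairs for 'rebuild' or 'rollback'."""
    if action == "rebuild":
        steps = [
            # Keep the current :latest around as :previous for rollback.
            # Fails harmlessly on the very first run, when there's no image yet.
            (["docker", "tag", f"{image}:latest", f"{image}:previous"], False),
            # --pull always tries to fetch a newer base image, which is where
            # the security updates come from.
            (["docker", "build", "--pull", "-t", f"{image}:latest", context], True),
        ]
        tag = "latest"
    elif action == "rollback":
        steps, tag = [], "previous"
    else:
        raise ValueError(f"unknown action: {action!r}")
    return steps + [
        # Force-remove the old container; fails harmlessly if none exists.
        (["docker", "rm", "-f", container], False),
        (["docker", "run", "-d", "--restart=always", "--name", container,
          f"{image}:{tag}"], True),
    ]


def run_steps(steps):
    for cmd, must_succeed in steps:
        print("+", " ".join(cmd))
        # check=True raises CalledProcessError and stops here, so a failed
        # build never gets as far as removing the running container.
        subprocess.run(cmd, check=must_succeed)


if __name__ == "__main__":
    if len(sys.argv) == 2 and sys.argv[1] in ("rebuild", "rollback"):
        run_steps(plan(sys.argv[1]))
    else:
        print(f"usage: {sys.argv[0]} rebuild|rollback", file=sys.stderr)
```

You might run python redeploy.py rebuild from a weekly cron job and python redeploy.py rollback when the new image misbehaves. Only one level of history is kept: the next rebuild overwrites :previous with whatever :latest was at that point.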

There. You’re done. Now you can go live your life instead of updating a million operating system packages.

-

¹ Sadly, this advice is not universal. I certainly understand what it’s like to have a rat king of complexity containing services with interdependencies too complex to be trivially stuffed into a single container.